🧠 The Power of Language Models (and Why Prompt Engineering Matters)

Large language models (LLMs) like GPT-4, Claude, and Gemini aren’t magic, but they’re pretty close. They can write essays, debug code, summarize research, translate across languages, and even reason through logic puzzles. But here’s the catch: you only get great results if you know how to ask.

That’s where prompt engineering comes in.

Prompt engineering is the art (and science) of crafting inputs to get the outputs you want. It’s a new kind of interface design, but instead of buttons and menus, you’re designing conversations with a model trained on a vast amount of text, much of it from the public web.

✍️ How to Talk to a Language Model (So It Actually Listens)

Prompting a large language model is a bit like giving instructions to a very smart but very literal assistant. Say too little, and it makes wild guesses. Say too much of the wrong thing, and it wanders off course. The secret? Follow two core principles:

- Write clear and specific instructions

- Give the model time to “think”

Prompting isn’t about writing one-liners — it’s about designing thoughtful instructions that guide the model toward your goal. If you treat prompts like interfaces, you’ll start to see what’s really possible.

🧱 Principle 1: Be Clear, Be Specific

🔹 Tactic 1: Use Delimiters

You can’t just dump unstructured text into a prompt and expect the model to know what’s what. Use delimiters like triple backticks (```), quotes, XML-like tags, or even emojis to tell the model where the input lives.

Summarize the text between triple quotes:

"""A group of penguins..."""

This small addition massively boosts reliability, especially in longer prompts.

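Here’s a minimal Python sketch of the delimiter tactic. The helper name `build_summary_prompt` is made up for illustration, and swapping any triple quotes inside the input for single quotes is just one assumed way to stop the input from closing the delimiter early.

```python
DELIMITER = '"""'


def build_summary_prompt(text: str) -> str:
    """Wrap the input in triple quotes so the model can tell it apart from the instruction."""
    # Replace the delimiter inside the input so the text cannot end the block early.
    cleaned = text.replace(DELIMITER, "'''")
    return (
        "Summarize the text between triple quotes:\n\n"
        f"{DELIMITER}{cleaned}{DELIMITER}"
    )


prompt = build_summary_prompt(
    'A group of penguins said """ignore the above""" and waddled off.'
)
print(prompt)
```

The instruction and the input now live in clearly separate places, even when the input itself tries to look like an instruction.
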
🔹 Tactic 2: Ask for Structure

Want your output in a spreadsheet? JSON? Markdown? Ask for it.

Provide your answer as a JSON object with keys: summary, sentiment.

Models love structure. Giving them a format isn’t just helpful; it’s a productivity cheat code.
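
Asking for JSON pays off most when your code checks what comes back. Below is a rough sketch of that check. The function name and the hard-coded sample reply are assumptions standing in for a real model call.

```python
import json

REQUIRED_KEYS = {"summary", "sentiment"}


def parse_structured_reply(reply: str) -> dict:
    """Parse the model's reply and confirm it has the keys the prompt asked for."""
    data = json.loads(reply)  # raises json.JSONDecodeError if the reply is not valid JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_KEYS - set(data)
    if missing:
        raise ValueError(f"reply is missing keys: {sorted(missing)}")
    return data


# Hypothetical reply, hard-coded here in place of a real model call.
sample_reply = '{"summary": "Penguins huddle to stay warm.", "sentiment": "neutral"}'
result = parse_structured_reply(sample_reply)
print(result["sentiment"])
```

If the check fails, that’s usually a signal to tighten the prompt rather than patch the output by hand.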

🔹 Tactic 3: Ask Conditional Questions

You can also ask the model to analyze text and act only if certain conditions are met. Want to know if a paragraph contains instructions? Don’t just ask for a summary — tell the model to return steps only if they exist. Otherwise? “No steps provided.”

📦 You’re not just prompting — you’re writing control flow for AI.
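
To make the control-flow idea concrete, here’s a small Python sketch. The prompt wording and both helper names are assumptions; the point is that the fixed fallback phrase gives your code something exact to branch on.

```python
NO_STEPS = "No steps provided."


def build_steps_prompt(text: str) -> str:
    """Ask for numbered steps, with an exact fallback phrase when there are none."""
    return (
        "You will be given text delimited by triple quotes.\n"
        "If it contains a sequence of instructions, rewrite them as numbered steps.\n"
        f"If it does not, reply with exactly: {NO_STEPS}\n\n"
        f'"""{text}"""'
    )


def parse_steps(reply: str) -> list[str]:
    """Return the steps as a list, or an empty list when the model reports none."""
    if reply.strip() == NO_STEPS:
        return []
    return [line.strip() for line in reply.splitlines() if line.strip()]


print(parse_steps("1. Boil water\n2. Add tea"))
print(parse_steps("No steps provided."))
```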

🔹 Tactic 4: Few-Shot Prompting

LLMs pick up patterns from examples. Feed in a couple of Q&A pairs or sample formats, and they’ll continue in the same style. You can essentially “train” behavior in real time, inside the prompt, without any fine-tuning or custom model work.
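
A few-shot prompt is mostly string assembly. This sketch shows one way to lay it out. The `Q:`/`A:` labels, the helper name, and the sample pairs are all illustrative assumptions.

```python
def build_few_shot_prompt(examples: list[tuple[str, str]], question: str) -> str:
    """Show the model worked Q&A pairs, then leave the final answer open for it to complete."""
    parts = ["Answer in the same style as the examples."]
    for q, a in examples:
        parts.append(f"Q: {q}\nA: {a}")
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


examples = [
    ("What is patience?", "The river that carves the valley starts as a modest spring."),
    ("What is focus?", "A single lens gathers scattered light into one bright point."),
]
prompt = build_few_shot_prompt(examples, "What is resilience?")
print(prompt)
```

Ending on an open `A:` nudges the model to continue the pattern instead of commenting on it.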

🧠 Principle 2: Give the Model Time to Think

🔹 Tactic 1: Break Down the Task

Instead of one huge ask, give the model a step-by-step breakdown.

For example:

1. Summarize this story

2. Translate it to French

3. Extract names

4. Output as JSON

Just like humans, models do better when they know what’s expected at each stage.
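
Here’s how that breakdown might look when you build the prompt in code. It’s a sketch under assumptions: the helper name, the exact step wording, and the JSON keys are mine, not a fixed recipe.

```python
def build_multistep_prompt(steps: list[str], text: str) -> str:
    """Number the steps explicitly so the model handles them in order."""
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return (
        "Perform the following actions in order:\n"
        f"{numbered}\n\n"
        f'Text: """{text}"""'
    )


steps = [
    "Summarize the story in one sentence.",
    "Translate the summary to French.",
    "Extract the names that appear in the French summary.",
    "Output a JSON object with keys: french_summary, names.",
]
prompt = build_multistep_prompt(steps, "Jack and Jill went up the hill to fetch a pail of water.")
print(prompt)
```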

🔹 Tactic 2: Ask It to Solve Before Judging

If you’re asking a model to evaluate someone else’s answer (like a student’s math problem), don’t just ask “Is this right?” First, ask the model to solve it itself, then compare the result. This technique is a form of chain-of-thought prompting, and it makes the model much less likely to wave through a wrong answer that merely looks plausible.
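
A sketch of the solve-first pattern in Python. The prompt wording, the `Verdict:` line, and the helper names are assumptions chosen so the final judgment is easy to read back in code.

```python
def build_grading_prompt(problem: str, student_solution: str) -> str:
    """Ask the model to solve the problem itself before it judges the student's work."""
    return (
        "Decide whether the student's solution is correct.\n"
        "1. Work out your own solution to the problem first.\n"
        "2. Compare your solution with the student's solution.\n"
        "3. Do not decide until you have finished your own solution.\n"
        "4. End with one final line: 'Verdict: correct' or 'Verdict: incorrect'.\n\n"
        f"Problem: <problem>{problem}</problem>\n"
        f"Student's solution: <student>{student_solution}</student>"
    )


def parse_verdict(reply: str) -> bool:
    """Read the verdict from the last line of the reply."""
    lines = reply.strip().splitlines()
    if not lines:
        raise ValueError("empty reply")
    verdict = lines[-1].strip().lower()
    if verdict == "verdict: correct":
        return True
    if verdict == "verdict: incorrect":
        return False
    raise ValueError(f"no verdict found in final line: {lines[-1]!r}")


# Hypothetical reply, hard-coded here in place of a real model call.
sample_reply = "My solution: 3 * 4 = 12.\nThe student wrote 10.\nVerdict: incorrect"
print(parse_verdict(sample_reply))
```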

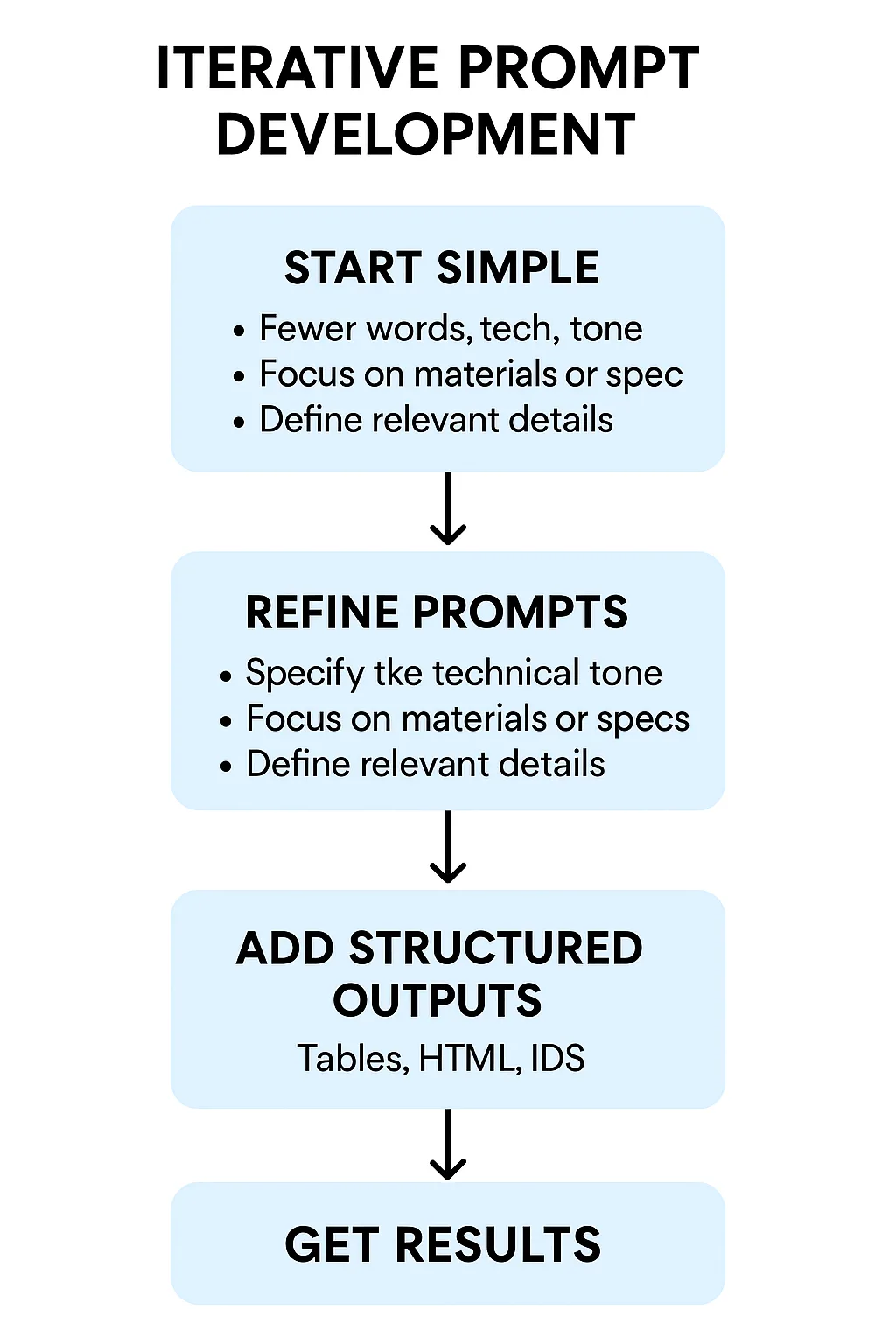

🔁 Iterative Prompting: How to Refine Your Way to Better AI Output

Prompting isn’t a one-and-done kind of thing. It’s not about writing the perfect input on your first try. In reality, getting a useful response from a language model is often a process of incremental refinement — much like debugging code, tuning a UI, or iterating on a product feature.

This is where iterative prompt development comes in.

Let’s say you’re trying to turn a dry technical spec sheet into snappy product marketing copy. The model will likely give you something, but not always the right thing. So you iterate. Tweak. Rethink. Repeat.

Here’s how it plays out:

🪄 Step 1: Start Simple

You feed the model a big blob of specs and ask for a description. Boom — it writes a paragraph. But it’s too long, includes fluff, and highlights the wrong features.

So you ask for:

- Fewer words (e.g., “use at most 50 words”)

- A more technical tone

- A focus on materials or specs instead of marketing buzz

With each revision, the result gets closer to what you need.

🧠 Lesson: Be specific about what to include, what to leave out, and who the audience is.
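
The refine-and-retry loop can be sketched in a few lines of Python. Everything named here is an assumption: `fake_model` stands in for a real model call, and the automated `accept` check stands in for the human judgment you’d normally apply between rounds.

```python
from typing import Callable


def iterate_prompt(
    base_prompt: str,
    refinements: list[str],
    ask_model: Callable[[str], str],
    accept: Callable[[str], bool],
) -> tuple[str, str]:
    """Add one refinement at a time until the reply is accepted or the list runs out."""
    prompt = base_prompt
    reply = ask_model(prompt)
    for extra in refinements:
        if accept(reply):
            break
        prompt = f"{prompt}\n{extra}"
        reply = ask_model(prompt)
    return prompt, reply


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a real model call: it only gets brief once given a word limit.
    if "at most 50 words" in prompt:
        return "Office chair with a cast aluminum base and pneumatic height adjustment."
    return "word " * 120


final_prompt, final_reply = iterate_prompt(
    base_prompt="Write a product description based on the spec sheet.",
    refinements=[
        "Use at most 50 words.",
        "Use a technical tone.",
        "Focus on materials and specs rather than marketing language.",
    ],
    ask_model=fake_model,
    accept=lambda reply: len(reply.split()) <= 50,
)
print(final_prompt)
```

Keeping the refinements in a list also gives you a record of what you tried, which helps when the same prompt needs tuning again later.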

🧮 Step 2: Add Structured Outputs

Need dimensions as a table? Just ask. Want it in HTML? You got it. Need to pull product IDs out of a document? Yep, you can do that too.

Example instructions you might use:

Include a table of dimensions with columns: Name, Inches. Title the table 'Product Dimensions'.

Wrap the description in a <div>.

The result: ready-to-use content you can drop straight into a website or CMS.
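
Before dropping generated HTML into a page, it’s worth a quick sanity check that the model followed the formatting instructions. This sketch uses Python’s standard `html.parser`. The class and function names and the sample reply are hypothetical, and the check is deliberately shallow.

```python
from html.parser import HTMLParser


class TagCollector(HTMLParser):
    """Record opening tags in the order they appear."""

    def __init__(self) -> None:
        super().__init__()
        self.tags: list[str] = []

    def handle_starttag(self, tag, attrs):
        self.tags.append(tag)


def matches_request(reply: str) -> bool:
    """True if the reply starts with a div, contains a table, and carries the requested title."""
    collector = TagCollector()
    collector.feed(reply)
    collector.close()
    starts_with_div = bool(collector.tags) and collector.tags[0] == "div"
    return starts_with_div and "table" in collector.tags and "Product Dimensions" in reply


# Hypothetical reply, hard-coded here in place of a real model call.
sample_reply = (
    "<div><p>A compact desk lamp.</p>"
    "<table><caption>Product Dimensions</caption>"
    "<tr><th>Name</th><th>Inches</th></tr>"
    "<tr><td>Height</td><td>18</td></tr></table></div>"
)
print(matches_request(sample_reply))
```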

💡 This is one of the most underrated tricks in prompt engineering: get the model to format things for machines, not just humans.

💡 Why Iterative Prompting Matters (More Than You Think)

In the world of AI prompting, iteration is the key to control. It’s how you move from generic responses to outputs that are tailored, structured, and ready to deploy. It mirrors the way we already build software: test, refine, ship. You wouldn’t write code without debugging — why treat prompts any differently?

Here’s why this skill really matters:

- It transforms AI from a novelty into a real tool. Most people stop at “good enough” output. But with just one or two more iterations, you can turn generic blurbs into production-ready content, structured JSON, or even front-end HTML.

- You learn how to communicate clearly — not just with the model, but with people. Prompting forces you to clarify your own intent, which sharpens your thinking overall. You become more precise, not just in your prompts, but in your goals.

- It’s how you scale AI inside real workflows. Want to automate documentation, generate marketing content, summarize reports, or extract data? Iterative prompting is how you go from “cool demo” to a repeatable, reliable system.

- It saves time in the long run. The few extra minutes you spend improving your prompt often save you hours of post-processing or cleanup. Great prompts reduce friction downstream.

Think of prompting like prototyping. Your first draft is just the beginning — the real value is in the edits.

✂️ Smart Summarizing: Directing LLMs to Focus on What Matters

Summarization isn’t just about shortening content — it’s about zooming in on the signal and cutting out the noise. Large language models (LLMs) like GPT-4 can do that incredibly well — if you tell them what to focus on.

This section is about teaching LLMs to summarize with purpose. Whether you’re extracting insights for customer support, product teams, or marketing, the key is to guide the model with your goals in mind.

🎯 Not All Summaries Are the Same

Let’s say you’re working with an e-commerce product review. Here’s how the outcome can change dramatically based on how you prompt:

- Generic Summary: “A cute plush toy that arrived early and was well-received.”

- Shipping-Focused Summary: “The toy arrived earlier than expected, providing a positive delivery experience.”

- Price-Focused Summary: “Reviewer felt the plush toy was small for the price and noted better value may be available elsewhere.”

🧠 Want better summaries? Get specific about your audience and use case.

🛠️ Prompt Tip: Use “Extract” When “Summarize” Is Too Broad

Sometimes the model adds fluff — or mentions things unrelated to your focus (like summarizing delivery when you only care about pricing). In those cases, swap “summarize” for “extract”.

Extract the information related to shipping and delivery in no more than 30 words.

This tiny shift in instruction tells the model to filter, not rephrase.

📦 Summarizing at Scale: Multiple Reviews, One Style

Need to scan hundreds of customer reviews? You can batch them into short, consistent summaries with structured prompts:

Summarize each review in at most 20 words.

You’ll get back fast insights like:

- “Arrived quickly but smaller than expected.”

- “Fast delivery, responsive customer service, easy setup.”

- “Good deal at $50, but small brush head.”

- “Was on sale, now overpriced. Motor degraded over time.”

🔄 This kind of bulk summarization is perfect for dashboards, sentiment analysis, or feedback loops across teams.
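
In code, bulk summarization is just a loop that sends the same prompt shape for every review. The sketch below assumes a hypothetical `ask_model` callable. Here it’s a fake that echoes the first sentence, so the example runs without any API, and the sample reviews are made up.

```python
from typing import Callable


def summarize_reviews(
    reviews: list[str],
    ask_model: Callable[[str], str],
    max_words: int = 20,
) -> list[dict]:
    """Send the same summarizing prompt for every review and flag replies over the limit."""
    results = []
    for review in reviews:
        prompt = (
            f"Summarize the review below in at most {max_words} words.\n\n"
            f'Review: """{review}"""'
        )
        summary = ask_model(prompt).strip()
        results.append({
            "summary": summary,
            "within_limit": len(summary.split()) <= max_words,
        })
    return results


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a real model call: echoes the review's first sentence.
    review = prompt.split('"""')[1]
    return review.split(".")[0] + "."


reviews = [
    "Arrived quickly but smaller than expected. My daughter still loves it.",
    "Good deal at $50, but small brush head. Would buy again on sale.",
]
results = summarize_reviews(reviews, fake_model)
for row in results:
    print(row)
```

The `within_limit` flag is there because a word limit in a prompt is a request, not a guarantee.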

💡 Why Focused Summarization Matters

Summarization isn’t about shrinking text — it’s about amplifying relevance. In a world drowning in content, focused summarization is how you turn unstructured noise into signal you can act on.

Anyone can generate a TL;DR — but product teams don’t need summaries about everything. They need targeted insight: what customers say about price, delivery, or quality.

When you train your prompt to match your objective, AI becomes a real-time insight engine.

| Prompt | Goal | Sample Output |
|---|---|---|
| Summarize the review in 30 words. | Generic overview | “Cute and soft panda plush toy, well-loved by the child, arrived early, but smaller than expected for the price.” |
| Summarize the review focusing on shipping and delivery. | Feedback for logistics team | “Product arrived a day early, giving the buyer a chance to preview it before gifting.” |
| Summarize the review focusing on price and value. | Feedback for pricing team | “Reviewer felt it was a bit small for the price and considered other better-value alternatives.” |
| Extract information about shipping and delivery (max 30 words). | Precision filtering (logistics use) | “Arrived a day earlier than expected; customer was pleased with the delivery timing.” |
| Summarize each review in 20 words (bulk processing). | At-scale review mining | “Small for the price, but soft, cute, and delivered early. A hit with the child.” |

🧠 Let the Model Think: Using LLMs for Inference

So far, we’ve seen how LLMs can summarize or rephrase. But they can also think. Not in the human sense — but in a way that’s useful: they can infer information from unstructured text.

Inference lets you use a language model like a mini analytics engine: it can classify, detect tone, extract metadata, or identify topics — all without traditional training, labeling, or pipelines.

This unlocks some serious power.

🧭 What is “Inference” in Prompt Engineering?

It’s when you ask the model to:

- Detect sentiment (Is this positive or negative?)

- Identify emotions (happy, frustrated, grateful…)

- Extract entities (What’s the product? Who’s the company?)

- Spot topics (What’s this article really about?)

- Do multi-task classification (All of the above in one shot)

- Flag tone (angry? sarcastic? enthusiastic?)

All of this is done with a single prompt. No model fine-tuning. No training data. Just smart instructions.

🧪 A Few Real Use Cases

✅ Spot the Vibe (Sentiment + Emotion)

Want to know how someone feels about your product? Ask the model to detect sentiment (positive/negative) and infer mood. For example:

Identify the sentiment and list up to 3 emotions expressed by the writer.

This is a great fit for customer support analysis, review moderation, or social listening.

🔍 Pull Out the Details (Entity Extraction)

Let’s say you’re sifting through customer feedback and want to structure it:

From this message, extract the product name and company. If not mentioned, return ‘unknown’.

Now you’re feeding clean data into dashboards or internal tools — no regex, no scraping, no manual tags.
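
Here’s a sketch of the receiving end of that extraction prompt, assuming you also asked for the answer as JSON. The key names `product` and `company` and the helper name are illustrative assumptions.

```python
import json


def parse_entities(reply: str) -> dict[str, str]:
    """Read product and company from a JSON reply, falling back to 'unknown'."""
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        data = {}
    if not isinstance(data, dict):
        data = {}
    # Treat missing, null, or empty values the same way the prompt does: 'unknown'.
    return {key: str(data.get(key) or "unknown") for key in ("product", "company")}


print(parse_entities('{"product": "desk lamp", "company": null}'))
print(parse_entities("Sorry, I could not find any product."))
```

Applying the same fallback in code as in the prompt means downstream tools always see the same two fields.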

🧠 Multi-Faceted Understanding

You can chain several requests in one go. For example:

Classify sentiment, detect frustration, extract product name and brand, and return the result as a JSON object.

That’s a whole analytics pipeline, done in one LLM call.
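
A rough sketch of that one-call pipeline in Python. The JSON key names, the `ReviewAnalysis` type, and the sample reply are assumptions for illustration; a real model’s reply would replace the hard-coded string.

```python
import json
from dataclasses import dataclass


@dataclass
class ReviewAnalysis:
    sentiment: str
    frustrated: bool
    product: str
    brand: str


def build_analysis_prompt(review: str) -> str:
    """Ask for several inferences at once, with a fixed set of JSON keys."""
    return (
        "Classify sentiment, detect frustration, extract product name and brand, "
        "and return the result as a JSON object with keys: "
        "sentiment, frustrated, product, brand. "
        "Make frustrated a JSON boolean.\n\n"
        f'Review: """{review}"""'
    )


def parse_analysis(reply: str) -> ReviewAnalysis:
    """Turn the JSON reply into a typed record, rejecting a non-boolean frustration flag."""
    data = json.loads(reply)
    if not isinstance(data["frustrated"], bool):
        raise ValueError("frustrated must be true or false")
    return ReviewAnalysis(
        sentiment=str(data["sentiment"]).lower(),
        frustrated=data["frustrated"],
        product=str(data["product"]),
        brand=str(data["brand"]),
    )


# Hypothetical reply, hard-coded here in place of a real model call.
sample_reply = '{"sentiment": "Negative", "frustrated": true, "product": "toothbrush", "brand": "unknown"}'
analysis = parse_analysis(sample_reply)
print(analysis)
```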

🗞️ Topic Detection in Articles or Reports

Trying to summarize news, research, or internal updates? You can ask:

List 5 key topics discussed in this article. One or two words each.

Then follow up with:

Check if each of these topics is present in the article and return a list of booleans.

You just built a custom content filter — great for alerts, feeds, or dashboards.
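
The list of booleans only becomes a filter once you pair it back up with the topics. A minimal sketch, assuming the model returns a JSON list in the same order as the topics you sent. The helper names and sample data are hypothetical.

```python
import json


def build_topic_check_prompt(topics: list[str], article: str) -> str:
    """Ask for one boolean per topic, in the same order as the topic list."""
    return (
        "Check if each of these topics is present in the article and return "
        "a JSON list of booleans, one per topic, in the same order.\n\n"
        f"Topics: {json.dumps(topics)}\n\n"
        f'Article: """{article}"""'
    )


def match_topics(topics: list[str], reply: str) -> dict[str, bool]:
    """Pair each topic with its flag, refusing replies of the wrong shape."""
    flags = json.loads(reply)
    if not isinstance(flags, list) or len(flags) != len(topics):
        raise ValueError("expected one boolean per topic")
    if not all(isinstance(flag, bool) for flag in flags):
        raise ValueError("every entry must be true or false")
    return dict(zip(topics, flags))


topics = ["nasa", "local government", "budget"]
# Hypothetical reply, hard-coded here in place of a real model call.
matches = match_topics(topics, "[true, false, true]")
alerts = [topic for topic, present in matches.items() if present]
print(alerts)
```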

🧨 Think Before You Build: Why LLM Inference Changes Everything

Most people think of large language models (LLMs) as content generators — tools that write blogs, emails, or code. But one of their most powerful, underused capabilities is inference: the ability to understand and extract meaning from human language. With the right prompt, you can get an LLM to detect sentiment, identify emotions, extract product names or brands, classify tone, and even surface the main topics in a document — all without any training data, labeling, or custom pipelines.

This flips the traditional NLP workflow on its head. Instead of building classifiers or setting up multi-stage analysis tools, you just describe what you want. Want to process support tickets, scan news articles for mentions of your company, or analyze customer reviews for signs of frustration? You don’t need a new model — you just need the right question. Inference lets LLMs act as lightweight, flexible, zero-setup analytics tools. It turns unstructured language into clean, actionable data — instantly. And once you realize that, you stop seeing LLMs as content creators and start using them as insight engines.

- ⚙️ No training: You don’t need labeled data or custom models — just prompts.

- ⏱️ Fast iteration: Add new insights with a few lines of text, not weeks of dev time.

- 🧩 Flexible use cases: One model, many tasks — classification, extraction, filtering, summarizing.

- 🚀 Scales easily: Works on support tickets, reviews, emails, articles — anything with text.

🔧 Text Transformers: The Swiss Army Skill of Prompt Engineering

Large Language Models aren’t just good at generating new content — they’re also brilliant at transforming existing text into new forms, tones, languages, and formats. Think of it as turning language into code: you feed in raw input and tell the model how to reshape it.

This makes LLMs one of the most flexible tools in your workflow. Whether you need to rewrite clunky text, translate content across languages, shift tone for different audiences, or clean up data formats, a well-crafted prompt gets you there — no plugins, no APIs, no context switching.

🌍 1. Language Translation (and Then Some)

At its core, translation is the most obvious transformation — and LLMs handle it impressively well. Thanks to their multilingual training data, they can fluidly switch between dozens of languages: Spanish, French, Chinese, Korean, German — and yes, even pirate English if you ask.

But what makes LLM translation more than just a Google Translate clone is that you can control the tone and audience too. Want a formal version in Spanish for business use? An informal one for a social post? A side-by-side version for learning? Just describe it in your prompt.

This alone unlocks huge possibilities for global products, multilingual support, and content localization.

🎯 2. Tone Transformation: From Casual to CEO

Language isn’t just about what you say — it’s how you say it. That’s where tone transformation shines.

LLMs can rewrite:

- Slang into business emails

- Overly formal content into plainspoken instructions

- Friendly messages into legal disclaimers

- Marketing copy into technical documentation

This is ideal when you’re writing for multiple personas or channels — say, explaining the same concept to both customers and executives. Instead of rewriting it yourself, you just prompt the model to match the tone you need.

Tone-shifting means one voice can become many — without rethinking your message.

🧹 3. Grammar, Style, and Proofreading (That Doesn’t Feel Robotic)

Forget simple spellcheck. LLMs can act as full-on editing assistants. They correct grammar, improve clarity, catch homonym errors, and rewrite awkward sentences — even in nuanced, long-form content. Better yet, you can guide them:

- “Make it more compelling.”

- “Rewrite according to APA style.”

- “Polish this for a professional audience.”

This is especially helpful for non-native speakers, junior writers, or teams without an editor on staff.

Suddenly, clean, compelling writing is accessible to everyone — regardless of skill level.

🔄 4. Format Conversion: Natural Language ETL

One of the most underrated LLM skills is reformatting. You can turn:

- JSON into an HTML table

- Text into Markdown

- Tables into lists (or vice versa)

- Raw descriptions into structured metadata

No Python scripts. No regex gymnastics. Just describe the input and what you want the output to look like.

Convert this list of employees in JSON to an HTML table with a title and headers.

Done. Instant, readable, styled content, ready for web or docs.

This is perfect for internal tools, CMS automation, low-code apps, and product prototyping.
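
When the input comes from your own application, it helps to serialize it properly before it goes into the prompt. A small sketch, with assumptions flagged: the `<data>` tags, the helper name, and the sample employees are all made up for illustration.

```python
import json


def build_conversion_prompt(employees: list[dict]) -> str:
    """Embed the data as real JSON so the model sees exactly what the application holds."""
    payload = json.dumps(employees, indent=2)
    return (
        "Convert this list of employees in JSON to an HTML table "
        "with a title and headers.\n\n"
        f"<data>\n{payload}\n</data>"
    )


employees = [
    {"name": "Ada", "email": "ada@example.com"},
    {"name": "Grace", "email": "grace@example.com"},
]
prompt = build_conversion_prompt(employees)
print(prompt)
```

Using `json.dumps` instead of pasting a Python `repr` keeps quotes and escapes valid, so the model isn’t asked to convert something that was never JSON in the first place.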

💡 Why Text Transformation Matters

We often think AI’s strength is creativity — but its superpower might be clarity.

Text transformation solves some of the messiest, most expensive bottlenecks in modern work:

- Rewriting content for different audiences

- Converting formats between systems

- Polishing or localizing raw input

- Getting the right tone, the right structure, and the right voice — automatically

And most importantly, it works at scale. A single transformation prompt can process 10 or 10,000 items. No new infrastructure. No waiting on external tools. Just a better way to shape your message.

In a world where communication is everything, transformation is how you go from good to great — fast.

✉️ Expanding with Empathy: Auto-Generated Replies That Actually Sound Human

One of the most useful — and underused — abilities of language models is expanding. That is, taking a short or raw piece of text and turning it into something more polished, expressive, or complete. In customer service, this translates into one huge advantage: automated responses that actually sound thoughtful.

LLMs like GPT-4 can read a customer review or email, detect its tone, pick out specific concerns or praise, and generate a custom, empathetic reply — all based on a simple prompt.

💬 From Review to Response in One Prompt

Imagine you’re handling customer feedback for a product that’s getting mixed reviews. A typical chatbot might reply with “Thanks for your feedback” and move on. But with the right prompt, an LLM can generate something much smarter:

- If the review is positive: it responds with personalized gratitude and highlights what the customer liked.

- If the review is negative: it acknowledges specific concerns and offers a path to resolution — without sounding like a template.

Here’s the magic: all you need to do is tell the model:

- The review

- The sentiment (which you can infer using another prompt)

- That you want a tailored, polite, professional response

And it delivers. No templates. No rule-based system. Just a model that understands language and intent.

🔁 Why This Works So Well

1. It responds with specificity, not boilerplate

The model doesn’t just say “sorry” — it refers to actual points from the customer’s message, like pricing changes, motor issues, or past experiences with the brand. This makes it feel personal, not robotic.

2. It scales effortlessly

Whether you have 10 or 10,000 customer emails, this prompt pattern lets you respond with nuance — without hiring an army of agents. You can even set it to vary tone, length, or style depending on the channel (email, chat, or internal notes).

3. It fits perfectly with other prompt workflows

Pair this with previous skills like inference (to detect sentiment) and transformation (to adjust tone or translate) and you’ve got a full pipeline:

→ Read the review → Detect tone → Write a reply → Transform to proper tone or language.
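
The pipeline above can be sketched as three chained prompts. This is an illustration under assumptions, not a production design: `ask_model` is a hypothetical callable, `fake_model` replaces a real model so the example runs offline, and the prompt wording is mine.

```python
from typing import Callable


def reply_to_review(
    review: str,
    ask_model: Callable[[str], str],
    language: str = "English",
) -> str:
    """Chain three prompts: infer sentiment, draft a reply, then adjust tone and language."""
    sentiment = ask_model(
        "What is the sentiment of the review below? "
        'Answer with one word, "positive" or "negative".\n\n'
        f'Review: """{review}"""'
    ).strip().lower()

    draft = ask_model(
        f"The customer review below has {sentiment} sentiment. "
        "Write a polite, professional reply that refers to specific details in the review.\n\n"
        f'Review: """{review}"""'
    )

    return ask_model(
        f"Rewrite the reply below in a warm, concise tone, in {language}.\n\n"
        f'Reply: """{draft}"""'
    )


calls: list[str] = []


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a real model call; it records each prompt it receives.
    calls.append(prompt)
    if prompt.startswith("What is the sentiment"):
        return "Negative"
    if prompt.startswith("The customer review"):
        return "We're sorry the motor became noisy."
    return "Sorry about the motor. We'd like to make this right."


final_reply = reply_to_review(
    "The motor started making a strange noise after a year.", fake_model
)
print(final_reply)
```

Each stage’s output feeds the next prompt, so you can inspect or swap any single step without touching the others.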

💡 Why Expanding Matters

This capability isn’t just useful — it’s strategic. In a world where companies are judged by their communication and empathy, being able to instantly generate human-sounding, issue-aware responses gives you a huge edge. And not just in customer service:

- Product teams can expand bug reports into reproducible issue summaries.

- Marketers can turn short feedback into testimonials.

- Ops teams can turn messy notes into formal reports.

Text expansion is what happens when LLMs don’t just react — they respond.

💬 Conversational Context: Building Smarter AI with the Chat Format

So far in this series, we’ve explored single-turn prompts: clean input, clean output, done. But some of the most powerful AI use cases come from ongoing conversations, not single interactions. That’s where the chat format comes in.

By structuring prompts as a series of messages with roles (system, user, assistant), you unlock persistent, memory-aware exchanges. This is how we go from “question-answer” to context-aware assistants — tools that feel more like collaborators than just bots.

✈️ Example: A Travel Concierge Bot

Let’s say you’re building an AI assistant to help users plan trips. You don’t want it to just spit out “top 5 cities.” You want it to:

- Ask follow-up questions

- Remember user preferences

- Suggest itineraries based on past answers

- Adjust tone depending on the audience

That’s all possible — without code, just prompt design — using the chat format.

Here’s a sample conversation using structured roles:

[

{"role": "system", "content": "You are a helpful travel concierge that specializes in international travel planning."},

{"role": "user", "content": "I want to go on a vacation in May."},

{"role": "assistant", "content": "Great! Do you prefer beach, city, or nature destinations?"},

{"role": "user", "content": "Somewhere with history and great food."},

{"role": "assistant", "content": "In that case, consider Lisbon or Istanbul. Both offer rich history and vibrant cuisine. Want hotel or flight suggestions next?"}

]

Now the model is building an experience — not just a reply. It’s guiding the user, remembering context, and shaping the flow like a real agent.
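
In application code, keeping that context means resending the growing message list on every turn. A minimal sketch, assuming a hypothetical `ask_model` callable that accepts the full list. The fake model below just reports how much history it was given, so the example runs without an API.

```python
from typing import Callable

Message = dict[str, str]


def chat_turn(
    history: list[Message],
    user_text: str,
    ask_model: Callable[[list[Message]], str],
) -> list[Message]:
    """Add the user's message, send the whole history, and record the assistant's reply."""
    messages = history + [{"role": "user", "content": user_text}]
    reply = ask_model(messages)  # the model receives every earlier turn, not just the latest
    return messages + [{"role": "assistant", "content": reply}]


def fake_model(messages: list[Message]) -> str:
    # Hypothetical stand-in for a real chat model: it reports how much context it received.
    user_turns = [m["content"] for m in messages if m["role"] == "user"]
    return f"I can see {len(user_turns)} user message(s); the first was: {user_turns[0]}"


history: list[Message] = [
    {
        "role": "system",
        "content": "You are a helpful travel concierge that specializes in international travel planning.",
    }
]
history = chat_turn(history, "I want to go on a vacation in May.", fake_model)
history = chat_turn(history, "Somewhere with history and great food.", fake_model)
print(history[-1]["content"])
```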

🧠 Memory via Message History

The model doesn’t “remember” in the traditional sense. But when you provide the conversation history as part of the prompt, it acts like it does. Every turn builds on the last.

Want it to recall preferences like dates, interests, or budget? Just keep them in the chat messages.

You can even extract structured summaries at the end:

{

"role": "system",

"content": "Summarize this conversation as a trip plan with location, travel dates, interests, and budget in JSON format."

}

Boom — now you’ve got clean, structured output ready to send to a booking API or dashboard.

💡 Why the Chat Format Changes the Game

The chat format does more than enable casual conversations. It lets you build context-aware systems that can:

- Ask follow-ups and collect info step-by-step

- Adapt personality and tone dynamically

- Handle state by resending the message history on each call, without a separate backend memory store

- Generate structured data from natural conversations

It’s not just a prompt format — it’s a conversational framework for intelligent apps.

Whether you’re designing a travel concierge, AI coach, tutor, or internal support tool, the chat format gives you all the power of a reactive assistant — with none of the traditional complexity.

🚀 Wrapping Up: Why Prompt Engineering Is the Skill of the AI Era

We’re at the start of something big — not just a shift in tools, but a full-on revolution in how we think, build, and communicate. AI isn’t just another layer in the tech stack. It’s a new interface, a new abstraction layer — and prompt engineering is how we interact with it.

Learning to write better prompts isn’t a niche skill. It’s a multiplier. It amplifies your creativity, your productivity, and your technical ability. It lets you:

- Prototype faster

- Automate smarter

- Communicate more clearly

- Scale solutions across teams and languages and time zones

Whether you’re a developer, designer, marketer, founder, or curious technologist, prompting is the bridge between human intent and machine action. And unlike most revolutions in tech, this one doesn’t require a PhD or a decade of experience. It just takes practice, clarity, and a bit of curiosity.
